What is TFLite?

While machine learning has revolutionized computer vision, models have also continued to become more computationally expensive. To counter this cost, TensorFlow, one of the mainstay machine learning libraries, introduced TensorFlow Lite (TFLite) to make models more accessible at inference time. TFLite is a library designed to enable on-device machine learning, so that mobile, embedded, and edge devices can run performant models on their own.

TFLite models are built on the majority of the core computation operators in the TensorFlow library and are stored in an efficient, portable file format based on FlatBuffers, indicated by the .tflite file extension. As a result, TFLite has more limited computational capabilities, which it trades for lightweight, highly optimized performance.
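
To make the file format point concrete, here is a minimal sketch of a sanity check for exported files. It relies on the FlatBuffers convention of storing an optional 4-byte file identifier at byte offset 4, which the TFLite schema sets to `TFL3`. The function names are our own for illustration and are not part of any TensorFlow API.

```python
TFLITE_FILE_IDENTIFIER = b"TFL3"  # identifier declared in the TFLite FlatBuffers schema


def looks_like_tflite(data: bytes) -> bool:
    """Return True if the buffer carries the TFLite FlatBuffers file identifier."""
    # FlatBuffers layout: bytes 0-3 hold the root table offset,
    # bytes 4-7 hold the optional file identifier.
    return len(data) >= 8 and data[4:8] == TFLITE_FILE_IDENTIFIER


def file_looks_like_tflite(path) -> bool:
    """Read only the first 8 bytes of a file and check the identifier."""
    with open(path, "rb") as f:
        return looks_like_tflite(f.read(8))
```

This only checks the header, so it can catch a mislabeled or truncated download but does not validate the model itself.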

When Should You Consider Converting TensorFlow Models to TFLite?

TFLite’s primary focus is to target several key limitations of on-device machine learning: latency, privacy, connectivity, memory, performance, and power consumption.

- Latency: Full-size machine learning models are often too demanding to run entirely on-device, so users might choose to host the model in the cloud or on a local server. However, every prediction request then incurs added network latency compared to on-device inference.

- Memory Usage: Typical TensorFlow models can easily get so large that they cannot be run or even stored on certain edge devices. TFLite uses two strategies to reduce memory usage. The first is to massively reduce model size through various model compression and storage techniques. The second is to reduce, and provide more accurate limits for, memory usage during computation. This allows less computationally powerful devices to use machine learning models while freeing up space for the device to perform other necessary functions.

- Connectivity: Remote model hosting adds the requirement of internet connectivity for inference. While this might sound like a simple ask, it can be a debilitating constraint in contexts where devices are expected to operate independently and without additional setup such as wireless internet access. Examples include legged robots and drones mapping terrain in remote areas, or even in outer space. Removing this requirement eases restrictions on setup.

- Privacy: Data privacy is an increasingly important topic, and a necessary component of computer vision model pipelines. When external components such as remote model hosting or API requests are involved, certifying and maintaining data privacy becomes much more difficult. When inference runs offline on a single device, no personal data leaves the device and privacy is a much smaller issue.

- Reduced Power Consumption: Power consumption is a growing concern both operationally and environmentally. If the edge device is independently powered, for example by a battery, power is a limited resource that should be preserved when possible. Environmentally, the carbon footprint of machine learning model training and inference is coming under greater scrutiny. When memory storage and usage are made more efficient, power consumption is similarly reduced.
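
To give a rough sense of the memory point above, the sketch below estimates how much space a model's weights take at different numeric precisions. Lower-precision storage through quantization is one of the compression techniques the TFLite converter supports. This is a back-of-the-envelope calculation under our own assumptions: it ignores graph structure and metadata, and the parameter count is a hypothetical example rather than a figure for any particular model.

```python
# Bytes needed to store one weight at each precision.
BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def estimated_weight_size_mb(num_parameters: int, dtype: str) -> float:
    """Approximate storage for the weights alone, in megabytes (10**6 bytes)."""
    return num_parameters * BYTES_PER_WEIGHT[dtype] / 1_000_000


# Hypothetical model with 5 million parameters.
HYPOTHETICAL_PARAMETERS = 5_000_000
sizes = {
    dtype: estimated_weight_size_mb(HYPOTHETICAL_PARAMETERS, dtype)
    for dtype in BYTES_PER_WEIGHT
}
```

For this hypothetical model, `sizes` comes out to 20.0 MB at float32, 10.0 MB at float16, and 5.0 MB at int8, which is the difference between fitting and not fitting on many small devices.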

There are other technical differences between TFLite and TensorFlow. Since TFLite is optimized for performance in inference, once a model is in the .tflite format, it cannot be modified. This means that only TensorFlow models can be retrained or modified and TFLite models should only be used when one is firmly in the deployment step of the machine learning pipeline.

Not all of these limitations will necessarily apply to every user’s situation, and the limitations may vary in flexibility. For example, if connectivity isn’t possible, this completely rules out the possibility of a remotely hosted server. However, for limitations like memory usage and power consumption, the decision may change based on the specifications of the hardware and use case. A microcontroller would not be able to host most TensorFlow models, but a regular laptop has sufficient hardware capability for at least a few options. Therefore, one should carefully consider which aspects might have the most impact on their use case.

How Do You Export Your Trained Model in TFLite Format?

Currently, the officially suggested ways to obtain a TensorFlow Lite model are to use an existing TensorFlow Lite model, to create one with the TensorFlow Lite Model Maker, or to convert a TensorFlow model with the TensorFlow Lite Converter. Each of these has its own limitations. An existing model, or one created with Model Maker, may not offer the model complexity that you require, and the converter may fail depending on which TensorFlow functions your model uses.
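
For reference, the converter route can be sketched in Python as follows. This is a minimal example under assumptions: it expects a model already exported in TensorFlow's SavedModel format, the function name and paths are hypothetical, and it is not how Nexus performs its export. TensorFlow is imported inside the function so that the path check works even where TensorFlow is not installed.

```python
from pathlib import Path


def convert_saved_model_to_tflite(saved_model_dir, output_path, optimize=False):
    """Convert a TensorFlow SavedModel directory into a .tflite file.

    Returns the number of bytes written to output_path.
    """
    saved_model_dir = Path(saved_model_dir)
    if not saved_model_dir.is_dir():
        raise FileNotFoundError(f"SavedModel directory not found: {saved_model_dir}")

    # Imported lazily so the check above does not require TensorFlow.
    import tensorflow as tf

    converter = tf.lite.TFLiteConverter.from_saved_model(str(saved_model_dir))
    if optimize:
        # Default optimizations allow the converter to apply post-training quantization.
        converter.optimizations = [tf.lite.Optimize.DEFAULT]

    # convert() returns the serialized FlatBuffers model as bytes. It typically
    # raises an error if the graph uses operators the converter cannot handle.
    tflite_model = converter.convert()
    return Path(output_path).write_bytes(tflite_model)
```

A call such as `convert_saved_model_to_tflite("exported_model", "model.tflite", optimize=True)` would write the converted file, with both paths standing in for your own.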

With Datature’s Nexus platform, any object detection model trained on Nexus, from the most basic to the most complex, can be converted to TFLite.

Datature stores trained models on Nexus as artifacts in the Artifacts section. How artifacts are saved during training can be configured in the workflow training options. To export a model as a TFLite model, go to your project page and open the Artifacts section, select your preferred model, and select generate TFLite. Once the file is prepared, you can download the model or use its model key with Datature Hub for your own custom prediction.

What Are Some of the Use Cases of TFLite Models?

Once you have the TFLite model in hand, you have many resources to support your deployment. TFLite models can be deployed on a wide variety of devices, from laptops down to microcontrollers. Below is an example of an ESP-32 microcontroller that performs image classification on images captured by its onboard camera.

TFLite models are supported on multiple platforms and in languages such as Java, Swift, C++, Objective-C, and Python. Additionally, you can use out-of-the-box APIs from the TensorFlow Lite Task Library or build custom inference pipelines with the TensorFlow Lite Support Library. TFLite is also straightforward to use on Android devices.

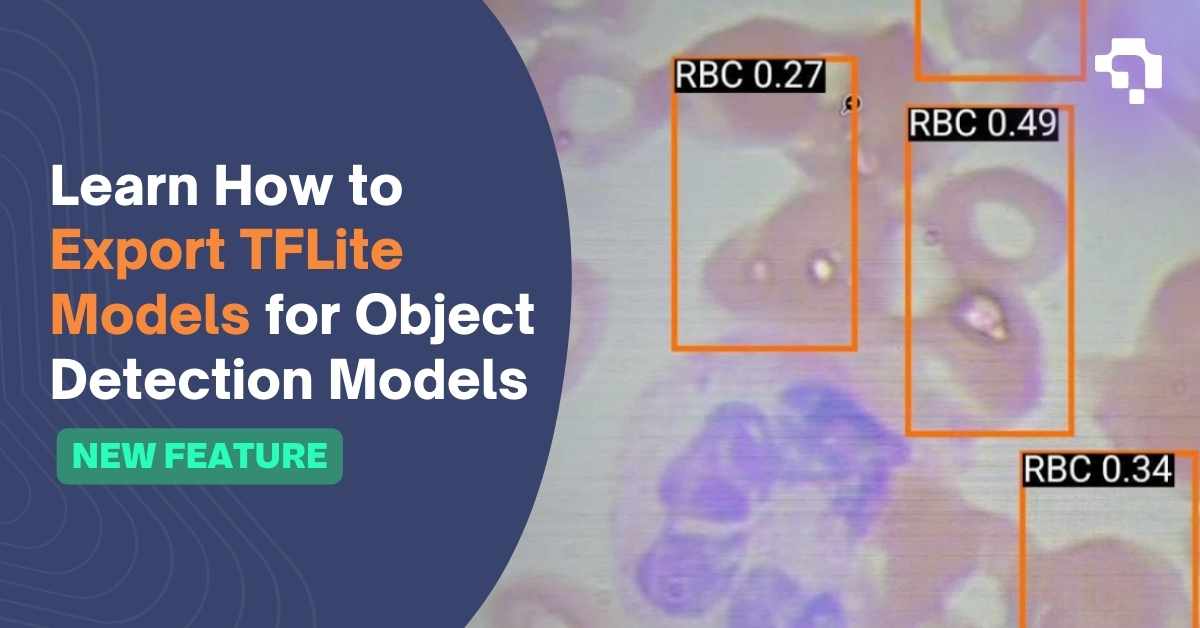

As a practical example of how TFLite can be used, we took the quickstart tutorial Android app provided by TensorFlow and swapped in a Nexus-trained model exported as a TFLite file. To reproduce the example, follow the installation steps in the link above and replace the app’s model file with a custom TFLite model exported from Nexus. Below is an example of a red blood cell object detector, deployed on an Android device, making predictions on live video captured by the phone camera.

Overall, TFLite provides a flexible and powerful platform for integrating computer vision into a wide range of mobile and edge applications. Its support for a wide range of model architectures and its emphasis on performance and efficiency make it an ideal choice for developers looking to bring the power of machine learning to their applications.

Additional Deployment Capabilities

Once inference with your new TFLite model is functioning well, you can also use TFLite models on edge devices such as a Raspberry Pi. With TFLite models on edge devices, you can tackle a wide variety of tasks. If latency is not an urgent priority, you can also consider platform deployment with our API management tools, where you can always alter the deployment’s capability as needed.
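
On a device that runs Python, such as a Raspberry Pi, a single inference pass can be sketched as below. The sketch assumes a TensorFlow installation that provides `tf.lite.Interpreter`; constrained devices often use a lighter runtime package instead. The function name is hypothetical, and the all-zeros input is only a placeholder for a real preprocessed image. The names and layout of the output tensors depend on the exported model, so inspect them before writing any post-processing.

```python
from pathlib import Path


def run_tflite_once(model_path, input_array=None):
    """Run one inference pass and return the output tensors keyed by name."""
    model_path = Path(model_path)
    if not model_path.is_file():
        raise FileNotFoundError(f"Model file not found: {model_path}")

    # Imported lazily so the check above does not require these packages.
    import numpy as np
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=str(model_path))
    interpreter.allocate_tensors()

    input_details = interpreter.get_input_details()[0]
    if input_array is None:
        # Placeholder input matching the shape and dtype the model expects.
        input_array = np.zeros(input_details["shape"], dtype=input_details["dtype"])

    interpreter.set_tensor(input_details["index"], input_array)
    interpreter.invoke()

    return {
        detail["name"]: interpreter.get_tensor(detail["index"])
        for detail in interpreter.get_output_details()
    }
```

Calling `run_tflite_once("model.tflite")` with your own file path is a quick way to confirm that the model loads and to see which outputs it produces.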

Our Developer’s Roadmap

While our deployment suite is extensive, we plan to support more model types to cover a broader range of use cases. To make Datature Inference more versatile, we have added compatibility with other edge deployment formats such as ONNX and will continue this push for cross-compatibility. This will allow Datature Inference to serve a wider suite of devices and applications.

Want to Get Started?

If you have questions, feel free to join our Community Slack to post your questions or contact us about how TFLite deployment fits in with your usage.

For more detailed information about the model export functionality, customization options, or answers to any common questions you might have, read more about model export on our Developer Portal.
